Machine Learning

Everyone is into LLMs right now, ~48 billion of investment went into AI/ML in 2023 alone because of the hype surrounding ChatGPT and similar products. Even more investment going into it this year if the first quarter is any indication. AI/ML is important, even if you’re tired of the snake oil you were sold in the past on ML/AI and were tired of hearing about it before the hype cycle hit, if you’re in the security space at all, you should care because your business or customers care and they’re using it, spending large amounts of money on it and its directly touching your most valuable data (because that’s what the business is training them on). Just when I thought Nvidia stock/profits couldn’t go any higher after increased demand for GPUs because of the crypto hype cycle which was somewhat waning, the AI hype cycle hits and now Nvidia can print money as fast as they can ship silicon, with no evidence of supply meeting demand any time soon.

While LLMs like ChatGPT are getting all the attention, they are not the main technology getting a lot of investment in most businesses. The more traditional models of AI/ML are what most businesses are still using, and while there’s been some internal rebranding, it is still largely being managed by “data scientists” who may have suddenly rebranded themselves as AI experts, because, well, follow the money? Their department will get more money, and they in turn will get higher salaries. Can you blame them?

All that to say, you need to be taking a look at a wider scope than just LLMs when educating yourself on AI/ML. Much of it has been around for a long time, and ChatGPT integrates a lot of these more traditional models that have been around for a while (such as image recognition) into itself. It’s very likely many AIs we will use in the future we’ll involve the interaction of multiple models, optimized for a different function, to help complete tasks and simulate human abilities.

The first thing I’d like to get out of the way is that learning about machine learning does not require learning about the math mentioned above. While it is cool to learn about perceptrons and how they mathematically represent the function of a biological neuron, it’s not entirely necessary to learn about the various models and call well-established libraries and tools to train and create your models. The course at fast.ai has this exact premise in mind when teaching you how to train your models and how ML/AI works. If you want to see what you can do today, without even taking a course, check out the [OffSec ML Playbook for plenty of examples of attacks you can do with almost no prior knowledge of ML, or using attacks in the same wheelhouse as ones you’ve seen for other environments.

On overfitting

One of the concepts you learn about when training an AI model, particularly in supervised learning, is the concept of overfitting. Another way of putting it is when it comes to training ML models, perfect, is the enemy of the good. You can train a model so well that it can reach a 100% accuracy rate with its training data. The problem is anything outside of that training data set doesn’t perfectly match it, or its exact parameters or characteristics even slightly it no longer recognizes it. A lot of this can be the context surrounding the data. In Chapter 2 of [Not with a Bug, But With a Sticker, the book talks about how image recognition models trained on the ImageNet dataset would misrecognize objects outside of the dataset. One example was a butterfly, usually the images of butterflies it was trained on were in the context of nature (ex: butterfly on a flower). The nature of ML models is such that they select for non-intuitive characteristics during training, in this case, it could easily recognize a butterfly on a flower, but put one on a car, or dog, or concrete wall, and suddenly the ML model didn’t label it as a butterfly. Similarly, the characteristics it might be “fixated” on when put in an image that looks unrecognizable to humans or just a different but valid object entirely can fool the model into thinking it is something it was trained on even though a human or even small animal would not make the same mischaracterization.

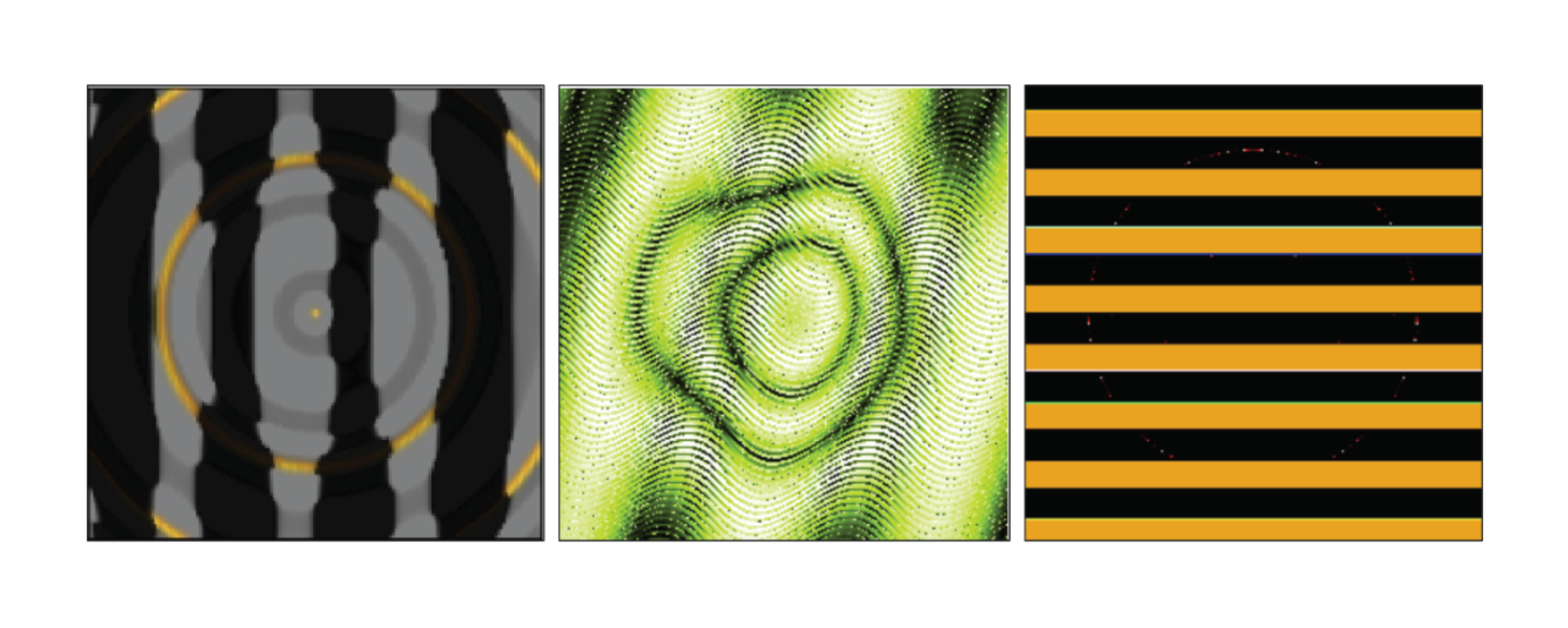

Credit: Anh Nguyen

Credit: Anh Nguyen

One such example, is the image above would fool certain image recognition models into seeing a penguin, a snake, and a school bus. This isn’t always a matter of overfitting but just the way an ML model learns to recognize an object may be completely different from the way we’d describe an object’s characteristics. This unusual feature specificity extends to other things as well. Think about malware, and ML models trained to detect malware. intuitively, you would assume an ML model trained to recognize malware or variations of existing malware would look at certain “magic” bytes, certain system calls being made that normal programs don’t make, or don’t make in the same way, high entropy (encrypted payloads), and other aspects sort of all combine to score whether it would rate as suspicious or not.

However, I’ve heard from those doing research in this space that the ML models tune on things that are innocuous like timestamp (given most malware in the catalog was created/built after a certain date, anything before that date would not meet the criteria for the malware it trained on), and weight it heavily. So as a malware developer, you can simply change to time stamp to be from the 90’s or early 00s or something, and suddenly the model weights it so heavily that it no longer finds what a DFIR might see as obvious malware, suspicious at all.

All this to say the concept of overfitting as well as parameter tuning/choice is not just a data science issue. It’s also a vector for adversarial attacks. Figure out what those parameters in the model are important and then use them to either avoid matching the model (in the case of malware evasion) or getting a false match/classification. It’s important to note you can figure out how a model works and which parameters are important, simply by interacting with it. Many models have APIs and little stopping you from querying it enough times to figure out how it works.

If you want more practical examples of how these kinds of attacks work, or want to try them for yourself, a friend of mine, Jan Nunez is releasing something soon which you can keep an eye on https://hackthesaucer.com which should debut at CackalackyCon in May. The target audience is students (high school/college) so don’t feel daunted by it. You don’t need even a graduate-level class to do this.

NYU Machine Learning

Speaking of graduate-level classes on Machine Learning, I’ve been taking a course on machine learning at NYU as part of a master’s program at NYU’s Tandon School of Engineering. I’m able to do this through a special fellowship program offered to professionals and my work’s education incentives cover the costs. I have started this program in place of certifications (aside from Certified Kubernetes Administrator and Certified Kubernetes Security Specialist). I figure for the same effort I can have a master’s degree in my field in around 3 years. This is my second year and I’ve enjoyed it though being in the field so long a lot of the material is already familiar to me and not very challenging.

This has not been the case for my machine learning course. The course material has been rigorous. It’s been heavy on math, for which I’ve had to educate myself a bit on the various math symbols and LaTeX for keeping math equations in my Obsidian Notes for the course. Thankfully, thanks to my extensive experience at this point in programming. I can parse the various formulas and algorithms fairly well as it’s just programming with a symbolic language (similar to hieroglyphics). Once I kind of understand or can map the symbols to various programming functions, I can follow the thought process.

All that said, it is still a lot to digest. My weeks consist of fairly intense study and note-taking. In the interest of learning a subject by explaining or writing about it, I’ll likely be making more posts about it in the future.